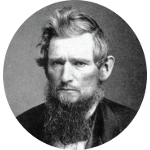

David Mimno is an Associate Professor and Chair of the Department of Information Science in the Ann S. Bowers College of Computing and Information Science at Cornell University. He holds a Ph.D. from UMass Amherst and was previously the head programmer at the Perseus Project at Tufts as well as a researcher at Princeton University. Professor Mimno’s work has been supported by the Sloan Foundation, the NEH, and the NSF.

Large Language Model Fundamentals

Cornell Certificate Program

Overview and Courses

In today's rapidly evolving digital landscape, large language models (LLMs) are revolutionizing the way we work, write, research, and make decisions. While AI breakthroughs capture headlines, the true potential lies in effectively applying these powerful tools. This certificate program is tailored for professionals eager to harness LLM technologies and implement them immediately.

Throughout this hands-on program, you will acquire practical skills to evaluate, prompt, fine-tune, and deploy LLMs to address real-world challenges. You'll delve into major platforms and tools, including the Hugging Face API, and discover how to select the right model for your needs. Techniques such as preference learning and tokenization strategy will be explored to shape model behavior, alongside foundational concepts like probabilities and attention mechanisms.

Guided by Cornell Professor David Mimno, a leading expert in computational text analysis, this program demystifies LLMs and equips you to integrate them confidently into your workflows. Whether you're in business, education, policy, or research, this program is your roadmap to making AI work for you, starting now.

You will be most successful in this program if you have had some exposure to natural language processing or machine learning. You should also be comfortable with Python programming and basic statistics.

The courses in this certificate program must be completed in the order in which they appear.

Course list

LLM Tools, Platforms, and Prompts

In this course, you will discover how to work directly with some of today's most powerful large language models (LLMs). You'll start by exploring online LLM-based systems and seeing how they handle tasks ranging from creative text generation to language translation. You'll compare how models from major organizations like OpenAI, Google, and Anthropic differ in their outputs and underlying philosophies.

You will then move beyond web interfaces to identify how to find and load various foundation models through the Hugging Face hub. By mastering Python scripts that retrieve and run these models locally, you'll gain deeper control over prompt engineering and understand how different model architectures respond to your requests. Finally, you'll tie all these skills together in hands-on projects where you generate text, analyze tokenization details, and assess outputs from multiple LLMs.

- Apr 8, 2026

- Jul 1, 2026

- Sep 23, 2026

- Dec 16, 2026

Language Models and Next-Word Prediction

In this course, you will use Python to quantify the next-word predictions of large language models (LLMs) and understand how these models assign probabilities to text. You'll compare raw scores from LLMs, transform them into probabilities, and explore uncertainty measures like entropy. You'll also build n-gram language models, handle unseen words, and interpret log probabilities to avoid numerical underflow.
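As a rough illustration of the ideas named above, the following sketch turns raw scores into probabilities with a softmax, measures uncertainty with entropy, and shows why log probabilities avoid numerical underflow. The scores and function names are hypothetical and are not taken from the course materials.

```python
import math

def softmax(logits):
    """Convert raw scores (logits) into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def entropy_bits(probs):
    """Shannon entropy in bits; higher means the prediction is less certain."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

# Hypothetical raw scores for three candidate next words.
logits = [2.0, 1.0, 0.1]
probs = softmax(logits)
uniform = softmax([0.0, 0.0, 0.0])  # equal scores give a uniform distribution

# Multiplying many small probabilities underflows to 0.0 ...
tiny = [1e-5] * 100
product = math.prod(tiny)
# ... while summing their logarithms stays representable.
log_sum = sum(math.log(p) for p in tiny)

print(probs, entropy_bits(probs), entropy_bits(uniform))
print(product, log_sum)
```

The uniform case has the highest entropy a three-way choice can have, which is one simple way to flag a low-confidence prediction.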

By the end of this course, you will be able to evaluate entire sentences for their likelihood, implement your own model confidence checks, and decide when and how to suggest completions for real-world text applications.
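One way to score a whole sentence is sketched below with a tiny bigram model. Add-one smoothing is assumed here as the way to handle unseen words; the course may use a different method, and the corpus and function names are made up for illustration.

```python
import math
from collections import Counter

def train_bigram(sentences):
    """Count unigrams and bigrams, padding each sentence with <s> and </s>."""
    unigrams, bigrams = Counter(), Counter()
    for sentence in sentences:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        unigrams.update(tokens)
        bigrams.update(zip(tokens, tokens[1:]))
    return unigrams, bigrams

def sentence_logprob(sentence, unigrams, bigrams):
    """Sum of add-one-smoothed bigram log probabilities (natural log)."""
    vocab_size = len(unigrams) + 1  # +1 reserves probability mass for an unseen word
    tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
    total = 0.0
    for prev, word in zip(tokens, tokens[1:]):
        numerator = bigrams[(prev, word)] + 1      # Counter returns 0 for unseen pairs
        denominator = unigrams[prev] + vocab_size
        total += math.log(numerator / denominator)
    return total

# Hypothetical toy corpus.
corpus = ["the cat sat", "the dog sat", "the cat ran"]
unigrams, bigrams = train_bigram(corpus)

seen = sentence_logprob("the cat sat", unigrams, bigrams)
unseen = sentence_logprob("the zebra sat", unigrams, bigrams)
print(seen, unseen)
```

Because of the smoothing, the sentence containing an unseen word still receives a finite score, only a lower one than the sentence built from observed bigrams.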

You are required to have completed the following course or have equivalent experience before taking this course:

- LLM Tools, Platforms, and Prompts

- Apr 22, 2026

- Jul 15, 2026

- Oct 7, 2026

- Dec 30, 2026

Fine-Tuning LLMs

In this course, you will discover how to adapt and refine large language models (LLMs) for tasks beyond their default capabilities by creating curated training sets, tweaking model parameters, and exploring cutting-edge approaches such as preference learning and low-rank adaptation (LoRA). You'll start by fine-tuning a base model using the Hugging Face API and analyzing common optimization strategies, including learning rate selection and gradient-based methods like AdaGrad and Adam.

As you progress, you will evaluate your models with metrics that highlight accuracy, precision, and recall, and then extend your techniques to include pairwise preference optimization, which lets you incorporate direct user feedback into model improvements. Along the way, you'll see how instruction-tuned chatbots are built, practice customizing LLM outputs for specific tasks, and examine how to set up robust evaluation loops to measure success.
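For readers unfamiliar with the evaluation metrics mentioned above, here is a minimal sketch of how accuracy, precision, and recall can be computed for a binary task. The labels are invented, the function name is hypothetical, and a non-empty list of examples is assumed.

```python
def classification_metrics(gold, predicted, positive=1):
    """Compute accuracy, precision, and recall for one positive class."""
    pairs = list(zip(gold, predicted))
    tp = sum(1 for g, p in pairs if g == positive and p == positive)  # true positives
    fp = sum(1 for g, p in pairs if g != positive and p == positive)  # false positives
    fn = sum(1 for g, p in pairs if g == positive and p != positive)  # false negatives
    correct = sum(1 for g, p in pairs if g == p)
    return {
        "accuracy": correct / len(pairs),
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }

# Hypothetical gold labels and model predictions.
gold = [1, 0, 1, 1, 0, 0, 1, 0]
predicted = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = classification_metrics(gold, predicted)
print(metrics)
```

Precision asks how many predicted positives were right, while recall asks how many true positives were found, so the two can diverge sharply on imbalanced data even when accuracy looks good.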

By the end of this course, you'll have a clear blueprint for building and honing specialized models that can handle diverse real-world applications.

You are required to have completed the following courses or have equivalent experience before taking this course:

- LLM Tools, Platforms, and Prompts

- Language Models and Next-Word Prediction

- May 6, 2026

- Jul 29, 2026

- Oct 21, 2026

Language Models and Language Data

In this course, you will analyze how large language models are constructed from diverse text sources and examine the entire model life cycle, from pretraining data collection to generating meaningful outputs. You'll explore how choices about data type, genre, and tokenization affect a model's performance, discovering how to compare real-world corpora such as Wikipedia, Reddit, and GitHub.

Through hands-on projects, you will design tokenizers, quantify text characteristics, and apply methods like byte-pair encoding to see how different preprocessing strategies shape model capabilities. You'll also investigate how models interpret context by studying keywords in context (KWIC) views and embedding-based analysis.
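To give a sense of what byte-pair encoding does, the sketch below learns merge rules from a toy word list by repeatedly fusing the most frequent adjacent pair of symbols. It is a simplified, character-level version written for illustration; production tokenizers differ in many details, and the word list and function name are assumptions.

```python
from collections import Counter

def learn_bpe(words, num_merges):
    """Learn merge rules by repeatedly fusing the most frequent adjacent pair."""
    # Represent each distinct word as a tuple of symbols, with its frequency.
    vocab = Counter(tuple(word) for word in words)
    merges = []
    for _ in range(num_merges):
        # Count every adjacent symbol pair, weighted by word frequency.
        pair_counts = Counter()
        for symbols, freq in vocab.items():
            for pair in zip(symbols, symbols[1:]):
                pair_counts[pair] += freq
        if not pair_counts:
            break  # every word is already a single symbol
        best = max(pair_counts, key=pair_counts.get)
        merges.append(best)
        # Rewrite each word, replacing occurrences of the best pair.
        new_vocab = Counter()
        for symbols, freq in vocab.items():
            merged, i = [], 0
            while i < len(symbols):
                if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                    merged.append(symbols[i] + symbols[i + 1])
                    i += 2
                else:
                    merged.append(symbols[i])
                    i += 1
            new_vocab[tuple(merged)] += freq
        vocab = new_vocab
    return merges, vocab

# Hypothetical toy corpus of words.
words = ["low", "low", "lower", "lowest", "newer", "newer"]
merges, vocab = learn_bpe(words, num_merges=2)
print(merges)
print(vocab)
```

Frequent character sequences end up as single tokens while rarer words stay split into smaller pieces, which is why the choice of training text shapes the resulting vocabulary.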

By the end of this course, you will have a clear understanding of how data selection and processing decisions influence the way LLMs behave, preparing you to evaluate or improve existing models.

You are required to have completed the following courses or have equivalent experience before taking this course:

- LLM Tools, Platforms, and Prompts

- Language Models and Next-Word Prediction

- Fine-Tuning LLMs

- Feb 25, 2026

- May 20, 2026

- Aug 12, 2026

- Nov 4, 2026

In this course, you will investigate the internal workings of transformer-based language models by exploring how embeddings, attention, and model architecture shape textual outputs. You'll begin by building a neural search engine that retrieves documents through vector similarity, then move on to extracting token-level representations and visualizing attention patterns across different layers and heads.
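Retrieval by vector similarity can be sketched in a few lines. The example below ranks documents by cosine similarity to a query vector; the three-dimensional vectors and document names are invented stand-ins for the embeddings a real model would produce.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def search(query_vec, doc_vecs, top_k=2):
    """Return the top_k (score, doc_id) pairs, best match first."""
    scored = [(cosine_similarity(query_vec, vec), doc_id)
              for doc_id, vec in doc_vecs.items()]
    return sorted(scored, reverse=True)[:top_k]

# Hypothetical document embeddings; a real system would compute these with a model.
doc_vecs = {
    "doc_cats": [0.9, 0.1, 0.0],
    "doc_dogs": [0.8, 0.3, 0.1],
    "doc_tax": [0.0, 0.2, 0.9],
}
query_vec = [1.0, 0.0, 0.0]

results = search(query_vec, doc_vecs)
print(results)
```

Cosine similarity ignores vector length and compares direction only, which is a common choice when embeddings vary in magnitude.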

As you progress, you will analyze how tokens interact with each other in a large language model (LLM), compare encoder-based architectures with decoder-based architectures, and trace how a single word's meaning can shift from input to output. By mastering techniques like plotting similarity matrices and identifying key influencers in the attention process, you'll gain insights enabling you to decode model behaviors and apply advanced strategies for more accurate, context-aware text generation.
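The way tokens attend to one another can be illustrated with scaled dot-product attention weights. In this sketch the `causal` flag stands in for one common difference between the two families: encoder-style models let every token see every other token, while decoder-style models mask later positions. The toy vectors and function names are assumptions, and real models add learned projections and multiple heads.

```python
import math

def softmax(scores):
    """Normalize scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_weights(queries, keys, causal=False):
    """Row i holds how strongly token i attends to each token j."""
    d = len(keys[0])
    weights = []
    for i, q in enumerate(queries):
        # Scaled dot product between this query and every key.
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in keys]
        if causal:
            # Decoder-style masking: a token cannot attend to later positions.
            scores = [s if j <= i else float("-inf") for j, s in enumerate(scores)]
        weights.append(softmax(scores))
    return weights

# Hypothetical token vectors used as both queries and keys.
vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
full = attention_weights(vectors, vectors)
masked = attention_weights(vectors, vectors, causal=True)

for row in full:
    print([round(w, 3) for w in row])
for row in masked:
    print([round(w, 3) for w in row])
```

Plotting a matrix like `full` or `masked` as a heatmap is one simple way to see which tokens influence each other.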

You are required to have completed the following courses or have equivalent experience before taking this course:

- LLM Tools, Platforms, and Prompts

- Language Models and Next-Word Prediction

- Fine-Tuning LLMs

- Language Models and Language Data

- Mar 11, 2026

- Jun 3, 2026

- Aug 26, 2026

- Nov 18, 2026

Faculty Author

Key Course Takeaways

- Set up and utilize large language models for generating accurate text responses

- Apply probability concepts to interpret and compare model predictions

- Customize language models for specific tasks using guided instructions

- Explore the influence of text datasets and tokenization on model output

- Analyze how training data sources and preparation strategies shape model capabilities

- Examine advanced model structures like attention layers and word embeddings to understand language generation

What You'll Earn

- Large Language Model Fundamentals Certificate from Cornell’s Ann S. Bowers College of Computing and Information Science

- 80 Professional Development Hours (8 CEUs)

Who Should Enroll

- Engineers

- Developers

- Analysts

- Data scientists

- AI engineers

- Entrepreneurs

- Data journalists

- Product managers

- Researchers

- Policymakers

- Legal professionals

| Program | Cost |
|---|---|
| Large Language Model Fundamentals | $3,750 |